ALBUQUERQUE, N.M. — Sandia National Laboratories researchers have created a method of processing 3D images for computer simulations that could have beneficial implications for several industries, including health care, manufacturing and electric vehicles.

At Sandia, the method could prove vital in certifying the credibility of high-performance computer simulations used in determining the effectiveness of various materials for weapons programs and other efforts, said Scott A. Roberts, Sandia’s principal investigator on the project. Sandia can also use the new 3D-imaging workflow to test and optimize batteries used for large-scale energy storage and in vehicles.

“It’s really consistent with Sandia’s mission to do credible, high-consequence computer simulation,” he said. “We don’t want to just give you an answer and say, ‘trust us.’ We’re going to say, ‘here’s our answer and here’s how confident we are in that answer,’ so that you can make informed decisions.”

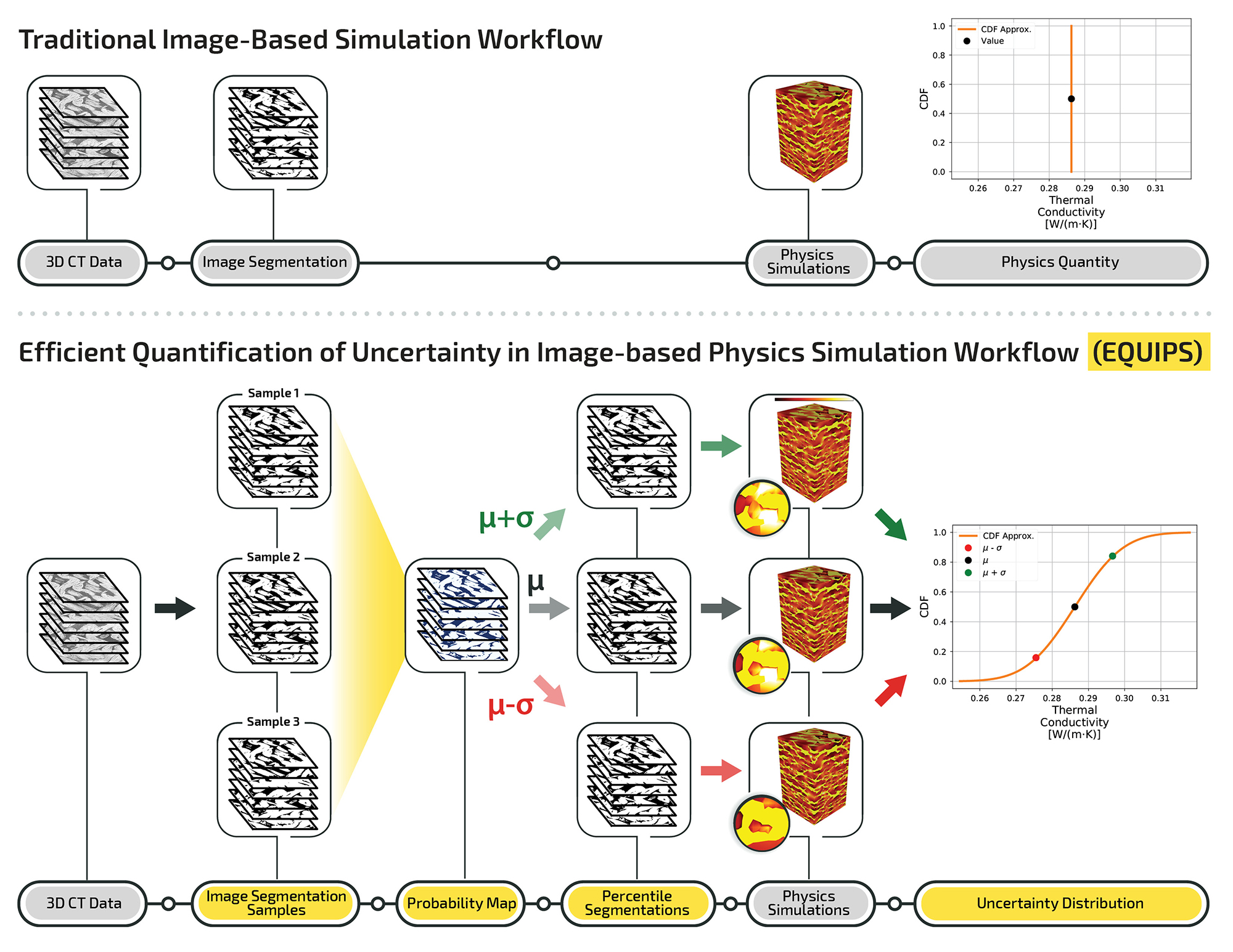

The researchers shared the new workflow, which the team dubbed EQUIPS, for Efficient Quantification of Uncertainty in Image-based Physics Simulation, in a paper published today in the journal Nature Communications.

“This workflow leads to more reliable results by exploring the effect that ambiguous object boundaries in a scanned image have in simulations,” said Michael Krygier, a Sandia postdoctoral appointee and lead author on the paper. “Instead of using one interpretation of that boundary, we’re suggesting you need to perform simulations using different interpretations of the boundary to reach a more informed decision.”

EQUIPS can use machine learning to quantify the uncertainty in how an image is drawn for 3D computer simulations. By giving a range of uncertainty, the workflow allows decision-makers to consider best- and worst-case outcomes, Roberts said.

Workflow EQUIPS decision-makers with better information

Think of a doctor examining a CT scan to create a cancer treatment plan. That scan can be rendered into a 3D image, which can then be used in a computer simulation to create a radiation dose that will efficiently treat a tumor without unnecessarily damaging surrounding tissue. Normally, the simulation would produce one result because the 3D image was rendered once, said Carianne Martinez, a Sandia computer scientist.

But drawing object boundaries in a scan can be difficult, and there is more than one sensible way to do so, she said. “CT scans aren’t perfect images. It can be hard to see boundaries in some of these images.”

Humans and machines will draw different but reasonable interpretations of the tumor’s size and shape from those blurry images, Krygier said.

Using the EQUIPS workflow, which can use machine learning to automate the drawing process, the 3D image is rendered into many viable variations showing the size and location of a potential tumor. Those different renderings will produce a range of different simulation outcomes, Martinez said. Instead of one answer, the doctor will have a range of prognoses to consider that can affect risk assessments and treatment decisions, be they chemotherapy or surgery.

“When you’re working with real-world data there is not a single-point solution,” Roberts said. “If I want to be really confident in an answer, I need to understand that the value can be anywhere between two points, and I’m going to make decisions based on knowing it’s somewhere in this range not just thinking it’s at one point.”

It’s a question of segmentation

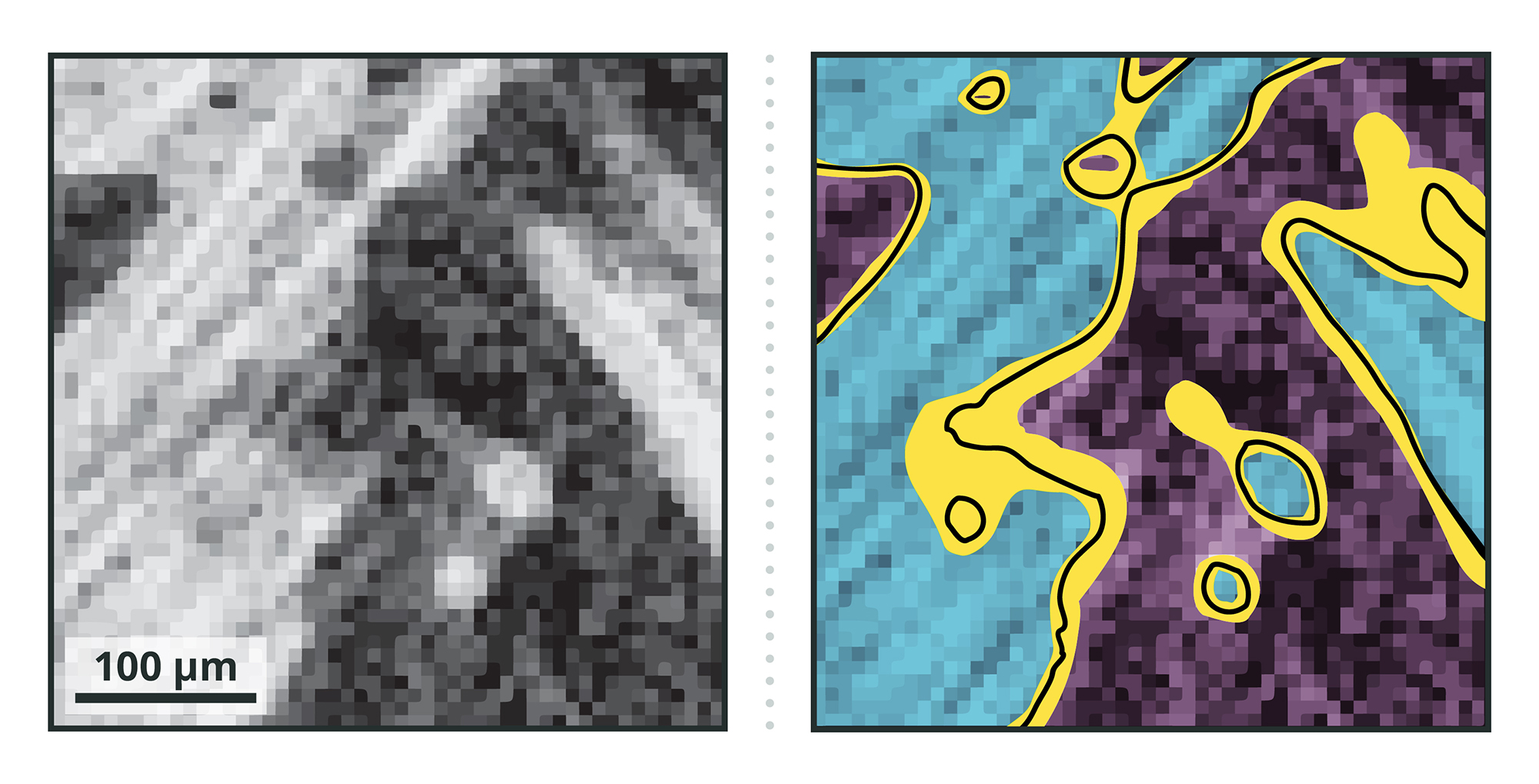

The first step of image-based simulation is image segmentation: put simply, deciding which object each pixel (or voxel, in a 3D image) belongs to, and therefore where the boundary between two objects lies. From there, scientists can begin to build models for computational simulation. But pixels and voxels blend together in gradual gradients, so it is not always clear where to draw the boundary line in the gray areas of a black-and-white CT scan or X-ray, Krygier said.
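As a rough illustration of why the boundary is ambiguous, the sketch below labels a single row of grayscale values using three equally defensible brightness cutoffs. The pixel values, the cutoffs and the `segment` function are all hypothetical; none of them come from the EQUIPS code or the paper.

```python
# Hypothetical grayscale values (0 = black, 255 = white) along one row of a scan,
# fading gradually from a bright object into a dark background.
row = [250, 245, 230, 200, 160, 120, 80, 45, 20, 10]

def segment(values, cutoff):
    """Label each pixel 1 (object) if it is at least as bright as the cutoff, else 0."""
    return [1 if v >= cutoff else 0 for v in values]

# Three plausible cutoffs (assumed for illustration) give three different object widths.
widths = {}
for cutoff in (100, 128, 180):
    labels = segment(row, cutoff)
    widths[cutoff] = sum(labels)
    print(cutoff, labels, "object width:", widths[cutoff])
```

Each cutoff puts the edge of the object in a different place, and nothing in the row itself says which one is right.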

The inherent problem with segmenting a scanned image is that, whether it’s done by a person using the best software tools available or with the latest machine learning capabilities, there are many plausible ways to assign the pixels to the objects, he said.

Two people performing segmentation on the same image are likely to choose different combinations of filters and techniques, leading to different but still valid segmentations. There is no reason to favor one image segmentation over another. It’s the same with advanced machine learning techniques. While machine learning can be quicker, more consistent and more accurate than manual segmentation, different neural networks use varying inputs and operate with different parameters. Therefore, they can produce different but still valid segmentations, Martinez said.

Sandia’s EQUIPS workflow does not eliminate such segmentation uncertainty, but it improves the credibility of the final simulations by making the previously unrecognized uncertainty visible to the decision-maker, Krygier said.

EQUIPS can employ two types of machine learning techniques, Monte Carlo Dropout Networks and Bayesian Convolutional Neural Networks, to perform image segmentation, with both approaches creating a set of image segmentation samples. These samples are combined into a probability map that gives the likelihood that a given pixel or voxel is in the segmented material. To explore the impact of segmentation uncertainty, EQUIPS uses the probability map to obtain multiple segmentations, which are then used to perform multiple simulations and calculate uncertainty distributions.
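The steps described above can be sketched in a few lines of Python. This is a toy under stated assumptions, not the EQUIPS implementation: a random labeler stands in for the neural network, the "scan" is a made-up row of 200 voxels, the probability cutoffs of 0.25, 0.5 and 0.75 are chosen only for illustration, and the "simulation" simply reports the fraction of voxels labeled as material.

```python
import random

random.seed(0)  # fixed seed so the sketch is repeatable

# Hypothetical 1D "scan" of 200 voxels: solid material, a blurry boundary, then empty space.
# Each value is the assumed chance that a stochastic segmenter labels the voxel as material.
true_chance = [1.0] * 80 + [1 - (k + 1) / 41 for k in range(40)] + [0.0] * 80

def sample_segmentation():
    """Stand-in for one stochastic pass of a segmentation network (1 = material)."""
    return [1 if random.random() < p else 0 for p in true_chance]

def toy_simulation(segmentation):
    """Placeholder for a physics simulation: returns the material volume fraction."""
    return sum(segmentation) / len(segmentation)

# 1. Collect a set of segmentation samples.
samples = [sample_segmentation() for _ in range(50)]

# 2. Combine them into a per-voxel probability map.
prob_map = [sum(voxel) / len(samples) for voxel in zip(*samples)]

# 3. Obtain several segmentations from the map (these cutoffs are an assumption).
cutoffs = (0.25, 0.5, 0.75)
segmentations = {c: [1 if p >= c else 0 for p in prob_map] for c in cutoffs}

# 4. Run the toy simulation on each one and report the spread of outcomes.
results = {c: toy_simulation(seg) for c, seg in segmentations.items()}
for c in cutoffs:
    print(f"cutoff {c}: volume fraction {results[c]:.3f}")
print(f"range: {min(results.values()):.3f} to {max(results.values()):.3f}")
```

The output is a range of volume fractions rather than a single number, which is the kind of best- and worst-case spread the workflow is meant to put in front of a decision-maker.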

Funded by Sandia’s Laboratory Directed Research and Development program, the research was conducted with partners at Indiana-based Purdue University, a member of the Sandia Academic Alliance Program. Researchers have made the source code and an EQUIPS workflow example available online.

To illustrate the diverse applications that can benefit from the EQUIPS workflow, the researchers demonstrated in the Nature Communications paper several uses for the new method: CT scans of graphite electrodes in lithium-ion batteries, most commonly found in electric vehicles, computers, medical equipment and aircraft; a scan of a woven composite being tested for thermal protection on atmospheric reentry vehicles, such as a rocket or a missile; and scans of both the human aorta and spine.

“What we really have done is say that you can take machine learning segmentation and not only just drop that in and get a single answer out, but you can objectively probe that machine learning segmentation to look at that ambiguity or uncertainty,” Roberts said. “Coming up with the uncertainty makes it more credible and gives more information to those needing to make decisions, whether in engineering, health care or other fields where high-consequence computer simulations are needed.”