ALBUQUERQUE, N.M. – Advanced computers may have beaten experts in chess and Go, but humans still excel at “one of these things is not like the others.”

Even toddlers excel at generalization, extrapolation and pattern recognition. But a computer algorithm trained only on pictures of red apples can’t recognize that a green apple is still an apple.

Now, a five-year effort to map, understand and mathematically re-create visual processing in the brain aims to close the computer-human gap in object recognition. Sandia National Laboratories is refereeing the brain-replication work of three university-led teams.

The Intelligence Advanced Research Projects Activity (IARPA) – the intelligence community’s version of DARPA – this year launched the Machine Intelligence from Cortical Networks (MICrONS) project, part of President Barack Obama’s BRAIN Initiative.

Three teams will map the complex circuitry in the visual cortex, where the brain makes sense of visual input from the eyes. By understanding how our brains see patterns and classify objects, researchers hope to improve how computer algorithms do the same. Such advances could improve how applications help find patterns in huge data sets and even lead to national security and intelligence applications.

The three university teams involved in the challenge include Carnegie Mellon University and the Wyss Institute for Biologically Inspired Engineering at Harvard University; Harvard University; and Baylor College of Medicine, the Allen Institute for Brain Science and Princeton University.

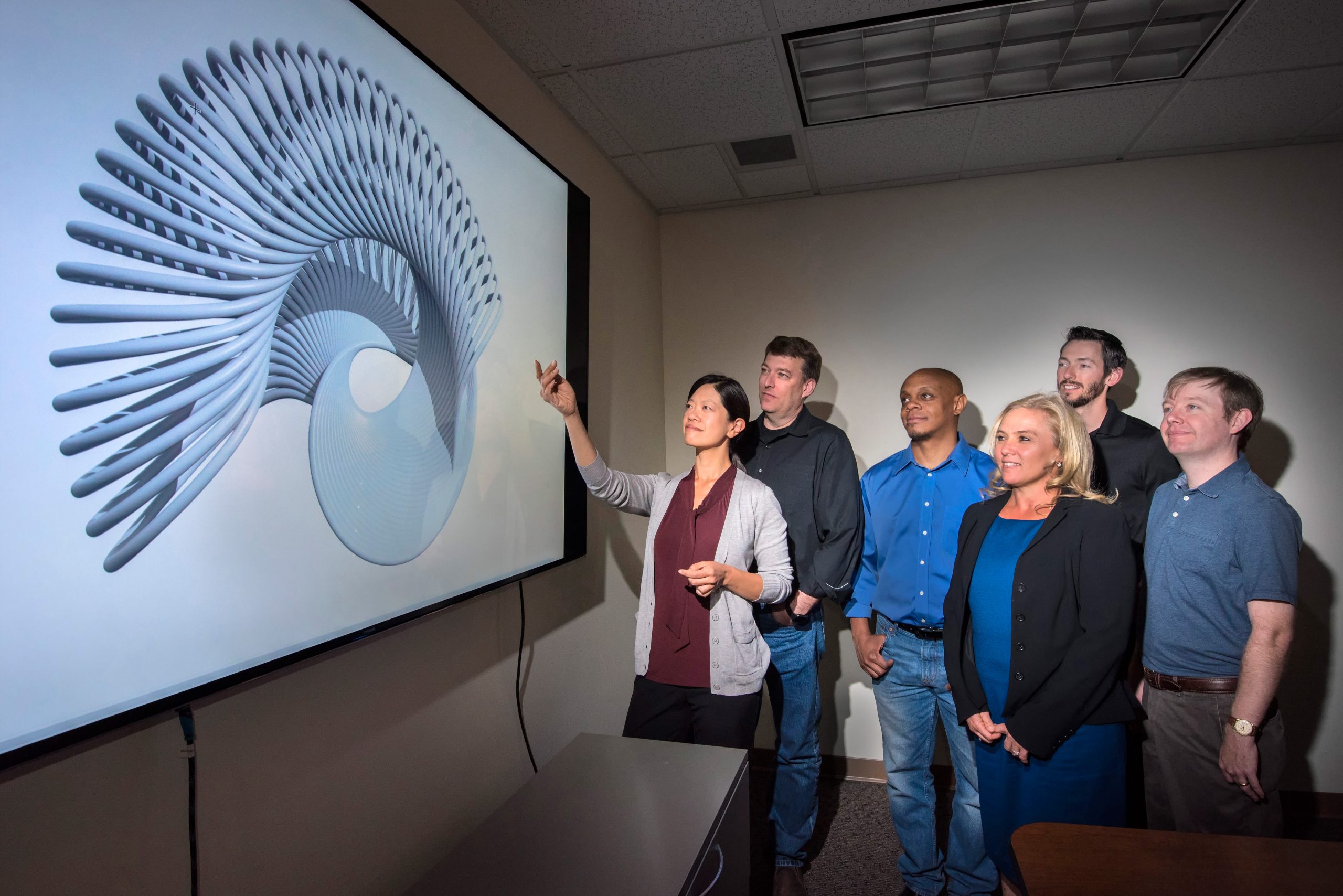

Researchers from Sandia led by Frances Chance, a computational neuroscientist, will test the resulting algorithms and evaluate whether they are on the right path.

“Research in neuroscience is a new area for Sandia, with potential to contribute to new scientific discoveries and mission needs in signal processing, high-consequence decision making, low-power high-performance computing and auto-associative memory,” said John Wagner, cognitive sciences manager at Sandia.

From neural networks to theoretical models to artificial intelligence

The teams each will use different techniques to map the network of interconnecting neurons that makes up the visual cortex. They’ll take those network maps and generate new models of how the brain works. From the models they hope to create vastly superior computer algorithms for object recognition.

“What is nice about the way the MICrONS program has been structured is that it is a beginning-to-end path. It goes from very basic neuroscience — anatomy at the nanometer and micrometer level — moving up into functional behavior of neural circuits in vivo, to theoretical models of how the brain works, and then machine learning models to do real-world applications. It’s the ideal of what brain-inspired algorithms should be, but it’s rarely done like this,” said Brad Aimone, a computational neuroscientist.

Aimone and his group will evaluate how much neuroscience the machine learning algorithms incorporate by focusing on the computational neuroscience models. These models are an important intermediate step: each team will have to consolidate a lot of data into a few key insights. The model has to both represent the brain and inspire advances in computer algorithms, Aimone said.

Augmented with a few experts from outside Sandia, Aimone’s group also will serve as a peer-review panel, comparing the university-led teams’ conclusions to what is already known about the brain. Additionally, Aimone’s group is applying validation and verification approaches common in engineering models, such as uncertainty quantification and sensitivity analysis, to computational neuroscience. A big challenge is that the mathematics needed for even a simplistic explanation of neural networks is amazingly complex. For example, neural models can include thousands of different parameters.
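To give a rough sense of what sensitivity analysis means in practice, here is a minimal Python sketch. It is not Sandia's method: the threshold-linear "neuron", its three parameters (`gain`, `drive`, `threshold`) and the function names are all hypothetical, chosen only to show how nudging one parameter at a time reveals which ones the output depends on most.

```python
def toy_rate(params):
    """Hypothetical threshold-linear neuron: rate = gain * max(0, drive - threshold)."""
    return params["gain"] * max(0.0, params["drive"] - params["threshold"])


def one_at_a_time_sensitivity(model, params, rel_step=0.01):
    """Estimate d(output)/d(parameter) for each parameter with a central difference."""
    sensitivities = {}
    for name, value in params.items():
        # Step size scales with the parameter; fall back to rel_step if value is 0.
        h = abs(value) * rel_step or rel_step
        up = dict(params, **{name: value + h})
        down = dict(params, **{name: value - h})
        sensitivities[name] = (model(up) - model(down)) / (2 * h)
    return sensitivities


# Illustrative baseline values, not taken from any real model.
baseline = {"gain": 2.0, "drive": 5.0, "threshold": 1.0}
sensitivities = one_at_a_time_sensitivity(toy_rate, baseline)
```

With three parameters this is trivial; with thousands, each extra parameter means more model runs, which is part of why the validation work is hard.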

Putting artificial intelligence to the test

Sandia also will test the resulting machine learning algorithms. Beginning a year from now, the team will see whether the brain-inspired algorithms actually perform like human brains.

“What’s interesting is that you and I would separate objects the same way even though we just met and we’ve had completely different life experiences. Somehow the way our brain is interacting with the world, we’re forming some internal set of rules by which we classify things that we see. And all of us have a similar set of rules in our heads. Would brain-inspired machine learning algorithms automatically separate it the same way?” asked Chance.

Computer scientist Warren Davis heads the group that will evaluate whether the algorithms can sort images of computer-generated objects in the same manner as humans, a task termed similarity discrimination. The algorithms get one example and must pick out the other members of the class from thousands of other images.
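The one-example task can be pictured as a ranking problem. The Python sketch below assumes each image has already been reduced to a short list of feature values, and it uses cosine similarity as the comparison; both are illustrative assumptions, as are the image names and numbers. The article does not say how the teams' algorithms represent or compare images.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def pick_class_members(example, gallery, k):
    """Return the k gallery images most similar to the single example."""
    ranked = sorted(
        gallery,
        key=lambda name: cosine(example, gallery[name]),
        reverse=True,
    )
    return ranked[:k]


# Hypothetical feature vectors standing in for test images.
gallery = {
    "img_a": [0.9, 0.1, 0.0],
    "img_b": [0.8, 0.2, 0.1],
    "img_c": [0.0, 1.0, 0.2],
    "img_d": [0.1, 0.0, 1.0],
}
example = [1.0, 0.1, 0.0]
picked = pick_class_members(example, gallery, k=2)
```

Here `picked` holds the two images whose features point in nearly the same direction as the example. The hard part, and the point of the program, is producing features under which "similar" matches human judgment.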

Computer scientist Tim Shead is designing the test images according to known human classification rules. His group also will confirm that real people classify the drawings as expected before the evaluations begin.

Next, the algorithms will have to sort an array of test images into classes. They will have to sort these images the same way humans do, even though the algorithms have never seen these images before. Finally, at the end of the five years, each team will pick a difficult, real-world task, such as creating captions for photos, that shows off its algorithm’s strengths. The algorithms also will be tested on their ability to recognize objects that have been stretched, rotated, partially hidden or otherwise modified.
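One common way to score whether two groupings of the same images agree is the Rand index: the fraction of image pairs that both groupings treat the same way, either together in one class or apart. The article does not say which measure Sandia will use, so the Python sketch below is only an assumed illustration, with made-up labels.

```python
from itertools import combinations


def rand_index(labels_a, labels_b):
    """Fraction of item pairs on which two groupings agree (same class vs. different)."""
    if len(labels_a) != len(labels_b) or len(labels_a) < 2:
        raise ValueError("need two label lists of equal length, at least 2 items")
    pairs = list(combinations(range(len(labels_a)), 2))
    agree = sum(
        (labels_a[i] == labels_a[j]) == (labels_b[i] == labels_b[j])
        for i, j in pairs
    )
    return agree / len(pairs)


# Hypothetical groupings of five test images.
human = ["x", "x", "y", "y", "z"]  # classes assigned by people
algo = [0, 0, 1, 1, 1]             # classes assigned by an algorithm
score = rand_index(human, algo)
```

The class names themselves do not need to match; only the grouping matters. In this example the algorithm merges the last image into a class that people keep separate, so the score falls below a perfect 1.0.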

Aimone said, “MICrONS is like the Hubble telescope. We’re building better tools to see things that we were unable to see before and we’re trying to come up with theories to explain what we’ve observed. Just like Hubble didn’t explain everything about the universe, MICrONS isn’t going to solve the brain. The hope is it will tell us something that will make our models better so we could use them to do interesting things.”