ALBUQUERQUE, N.M. — Fifty million artificial neurons, roughly the number of neurons in the brain of a small mammal, were delivered from Intel Corp. in Portland, Oregon, to Sandia National Laboratories last month, said Sandia project leader Craig Vineyard.

The neurons will be assembled to advance a relatively new kind of computing, called neuromorphic, that is based on the principles of the human brain. Its artificial components pass information much as living neurons do: each one pulses electrically only after it has absorbed enough charge through its synapses to produce an electrical spike.
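The threshold-and-spike behavior described above is often illustrated with a simple "leaky integrate-and-fire" neuron. The Python sketch below is a generic textbook simplification, not Intel's Loihi neuron model or any Sandia code; the function name `simulate_lif` and its `threshold`, `leak` and `reset` values are illustrative assumptions.

```python
def simulate_lif(input_charge, threshold=1.0, leak=0.9, reset=0.0):
    """Simulate one leaky integrate-and-fire neuron in discrete time steps.

    Illustrative sketch only: parameter names and defaults are assumptions,
    not taken from any specific neuromorphic chip.

    input_charge: charge arriving through the neuron's synapses at each step.
    Returns the list of time steps at which the neuron spiked.
    """
    potential = reset
    spike_times = []
    for t, charge in enumerate(input_charge):
        # Part of the stored charge leaks away, then new synaptic input is added.
        potential = leak * potential + charge
        # The neuron fires only once enough charge has built up.
        if potential >= threshold:
            spike_times.append(t)
            potential = reset  # discharge after the spike
    return spike_times


# A steady input builds up charge until the neuron fires, then starts over.
print(simulate_lif([0.3] * 10))   # [3, 7]
# Input too weak to overcome the leak never produces a spike.
print(simulate_lif([0.05] * 20))  # []
```

Between spikes the neuron stays silent, which is one reason such hardware is expected to use little power: activity, and therefore energy use, tracks only the moments when enough charge has accumulated.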

“With a neuromorphic computer of this scale,” Vineyard said, “we have a new tool to understand how brain-based computers are able to do impressive feats that we cannot currently do with ordinary computers.”

Improved algorithms and computer circuitry could broaden the range of applications for neuromorphic computers, Vineyard said.

John Wagner, Sandia’s manager of cognitive and emerging computing, said, “This very large neural computer will let us test how brain-inspired processors use information at increasingly realistic scales as they come to actually approximate the processing power of brains. We expect to see new capabilities emerge as we use more and more neurons to solve a problem — just like happens in nature.”

“The shipment is the first in a three-year series of collaborator test beds that will house increasingly sophisticated neural computers,” said Scott Collis, director of Sandia’s Center for Computing Research. “If research efforts prove successful, the total number of experimental neurons in the final model could reach 1 billion or more.”

Said Mike Davies, director of Intel’s Neuromorphic Computing Lab, “As high demand and evolving workloads become increasingly important for our national security, Intel’s collaboration with Sandia will provide the tools to successfully scale neuromorphic computing solutions to an unprecedented level. Sandia’s initial work will lay the foundation for the later phase of our collaboration, which will include prototyping the software, algorithms and architectures in support of next-generation large-scale neuromorphic research systems.”

Machine-learning achievements, in which neuromorphic computing plays a part, already include using neural circuits to make critical decisions for self-driving cars, classifying vapors and aerosols in airports and identifying an individual’s face out of a mass of random images. But experts in the field think such capabilities are only a starting point for applying machine learning to more complex fields, such as remote sensing and intelligence analysis.

Added Wagner, “We have been surprised that neural computers are not only good at processing images and data streams. There is growing evidence that they excel at computational physics simulations and other numerical algorithms.”

Sandia artificial intelligence researcher Brad Aimone said, “In terms of neural-inspired computing uses, we are still in the infancy of the explosion to come.”

Explosion of uses to come from neural-inspired computing

Intel, a commercial computer chipmaker, and Sandia, a national security lab, aim to explore the effects of increased scale on the burgeoning field of artificial intelligence in commercial and defense areas, respectively.

For both areas, “what’s still to be determined are the extent of problems that can be solved by AI and the best algorithm and architectures to use to solve them,” Vineyard said. “We can now build these systems at scale, but which algorithms and architectures would best capitalize on the fabrication advances are unknown.”

Modeled on the complex linking of neurons in the human brain, neuromorphic computers eventually may use far less electrical power and weigh much less than today’s personal computers.

Computers with artificial neurons act through mathematical approximations, rather than forcing a problem’s solution into conventional computing paths that may contain a forest of inessential steps, said Vineyard.

But progress on hardware that, like the biological brain, operates through electrical spikes has been delayed because “many algorithms that exist today are based upon developers making efficient use of mainstream computer architectures,” said Vineyard. “But brains operate differently, more like the interconnected graphs of airline routes, than any yes-no electronic circuit typically used in computing. So, a lot of brain-like algorithms struggle because current computers aren’t designed to execute them.”

Neural algorithms on neural computers may just hum along

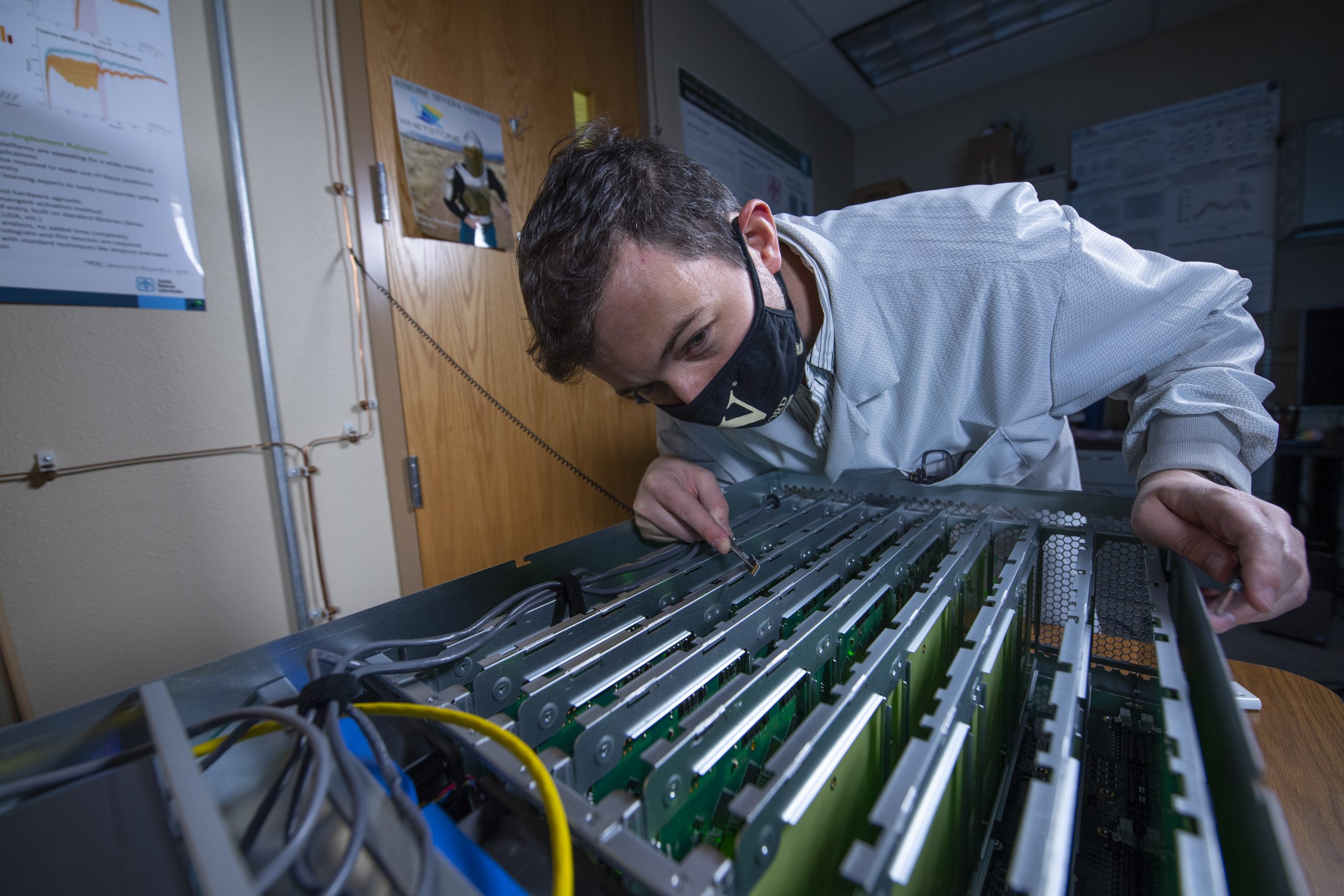

An additional aim for Vineyard and colleagues, who already work with small prototype systems of two and eight chips from Loihi, Intel’s most advanced class of neuromorphic chips, is to add enough neurons that algorithms based on brain-like logic can run on brain-like computer architectures.

By using emerging brain-inspired architectures to do research and development, “we’ll be providing new approaches and abilities from which novel algorithms can emerge,” said Vineyard.

The work is funded by the National Nuclear Security Administration’s Advanced Simulation and Computing program.